The Marcenko Pastur Theorem

import numpy as np, pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# -Generate Probability Density Function as per Marcenko Pastur Theorem, for random process with zero mean, and specified variance

def mpPDF(var,q,pts):

# Var - Variance of the underlying process. Different variance will generate different PDF

#q = T/N T is #observations, N is #Independent Variables

#pts - How granularly you want to plot eigen values from Min Eigen Value to Max Eigen Value

eMax = var*(1+((1./q)**.5))**2 # Max Eigen Value

eMin = var*(1-((1./q)**.5))**2 # Min Eigen Value

eVal = np.linspace(eMin,eMax,pts) # Divide space b/w Min & Max Eigen into equally spaced intervals

pdf = (q/(2*np.pi*eVal*var))*(((eMax-eVal)*(eVal-eMin))**.5) # PDF as per Theorem

pdf = pd.Series(pdf,index=eVal) # Use Pandas to store result as series, index with Eigen value

return pdf # Return the Probability Density Function

def gauss_gen(s):

x = np.random.normal(scale=s,size=(10000,1000))

return x

gdata0 = gauss_gen((1.)**.5)

gdata1 = gauss_gen((1.2)**.5)

gdata2 = gauss_gen((1.1)**.5)

# Generating the

mppdf0 = mpPDF(var=1.,q=gdata0.shape[0]/float(gdata0.shape[1]),pts=1000)

mppdf1 = mpPDF(var=1.2,q=gdata1.shape[0]/float(gdata1.shape[1]),pts=1000)

mppdf2 = mpPDF(var=1.1,q=gdata2.shape[0]/float(gdata2.shape[1]),pts=1000)

fig, ax = plt.subplots()

plt.plot(mppdf0,'r',label='Variance 1')

plt.plot(mppdf1,'g',label='Variance 2')

plt.plot(mppdf2,'b',label='Variance 0.5')

ax.set_xlabel('Eigen Value')

ax.set_ylabel('Probability of Eigen Value')

ax.legend()

plt.show()

def getPCA(y):

eVal, eVec = np.linalg.eigh(y) # Calculate eigen values and eigen vectors of the correlation matrix

indices = eVal.argsort()[::-1] #Identify the indices that correspond to eigen values in decreasing order, if 1 (values in increasing order)

eVal, eVec = eVal[indices], eVec[:,indices] #Just specifying the indices, means you can re-order eigen values & eigen vectors

eVal = np.diagflat(eVal) #It places the eigen values in a diagonal matrix

return eVal, eVec

# Run code block for the different input data variants

eVal0, eVec0 = getPCA(np.corrcoef(gdata0,rowvar=0)) # Calculate the correlation coefficient, if rowvar =1 (rows contain independent variables), if rowvar = 0 (columns contain independent variables)

eVal1, eVec1 = getPCA(np.corrcoef(gdata1,rowvar=0))

eVal2, eVec2 = getPCA(np.corrcoef(gdata2,rowvar=0))

# Plot the eigen values

print(eVal0)

print(eVal1)

print(eVal2)

#sns.distplot(eVal0,label='Variance 1')

#sns.distplot(eVal1,label='Variance 2')

#sns.distplot(eVal2,label='Variance 0.5')

#plt.legend()

#plt.show()

# Calculate the Kernel PDE

from sklearn.neighbors.kde import KernelDensity

def fitKDE(obs, bWidth = .25, kernel='gaussian',x=None):

if len(obs.shape) == 1: obs = obs.reshape(-1, 1)

kde = KernelDensity(kernel=kernel, bandwidth=bWidth).fit(obs)

if x is None: x = np.unique(obs).reshape(-1, 1)

if len(x.shape) == 1: x = x.reshape(-1, 1)

logProb = kde.score_samples(x) # log(density)

pdf = pd.Series(np.exp(logProb), index=x.flatten())

return pdf

## Run Kernel PDE for different input distributions

gpdf0 = fitKDE(np.diag(eVal0),bWidth=.01)

gpdf1 = fitKDE(np.diag(eVal1),bWidth=.01)

gpdf2 = fitKDE(np.diag(eVal2),bWidth=.01)

# Plot the two distributions

import matplotlib.pyplot as plt

fig, ax1 = plt.subplots()

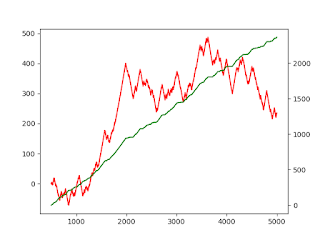

plt.plot(mppdf0,'r',label='Template PDF of Covariance matrix - Variance 1')

plt.plot(gpdf0,'g',label='Actual PDF of Covariance matrix - Variance 1')

ax1.set_xlabel('Eigen Value')

ax1.set_ylabel('Probability of Eigen Value')

ax1.legend()

plt.show()

fig, ax2 = plt.subplots()

plt.plot(mppdf1,'r',label='Template PDF of Covariance matrix - Variance 2')

plt.plot(gpdf1,'g',label='Actual PDF of Covariance matrix - Variance 2')

ax2.set_xlabel('Eigen Value')

ax2.set_ylabel('Probability of Eigen Value')

ax2.legend()

plt.show()

fig, ax3 = plt.subplots()

plt.plot(mppdf2,'r',label='Template PDF of Covariance matrix - Variance 0.5')

plt.plot(gpdf2,'g',label='Actual PDF of Covariance matrix - Variance 0.5')

ax3.set_xlabel('Eigen Value')

ax3.set_ylabel('Probability of Eigen Value')

ax3.legend()

plt.show()

Comments

Post a Comment