PyCharm Productivity Hacks

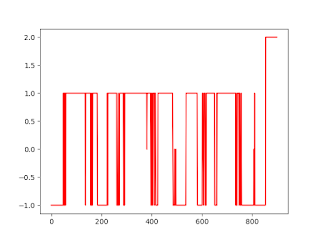

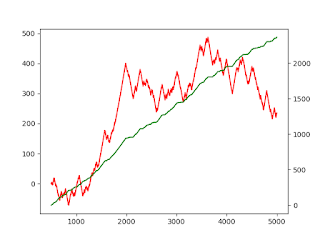

This post describes some hacks to improve your productivity on Machine Learning projects. It is scoped to Python development in the PyCharm IDE.

Scientific Mode: This feature is only available in the Professional edition of PyCharm.

Code blocks: Placing # %% at the start of a code block creates a cell, similar to a cell in Jupyter. Each cell gets a dedicated play button, so you can prototype commands quickly without running the entire script. This is most valuable when your code includes a load function that takes a long time to run.

View variable: You can view a sample of the data you have just loaded and see a plot of that data. This is useful during the data exploration stage, when you are trying to build hypotheses.

In-line visualisation: You can see the results of cell commands that use libraries such as seaborn or matplotlib in line, just by running a cell. You do not have to run the entire program, leading to ...
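As a rough illustration of the cell workflow, the sketch below splits a script into two cells with # %% markers. The `load_data` function and its parameters are hypothetical stand-ins for whatever slow load step your project has, and the data is randomly generated rather than real. It uses only the standard library so it runs anywhere.

```python
# %% Cell 1: load the data once
# load_data is a hypothetical stand-in for a slow load function;
# a real project would read files or query a database here.
import random
import statistics


def load_data(n_rows=1000, seed=0):
    """Return n_rows synthetic values drawn from a standard normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n_rows)]


data = load_data()

# %% Cell 2: explore the loaded data without re-running Cell 1
sample = data[:5]  # a small sample, similar to what the variable viewer shows
summary = {
    "rows": len(data),
    "mean": statistics.mean(data),
    "stdev": statistics.stdev(data),
}
print(sample)
print(summary)
```

Run Cell 1 once, then edit and re-run Cell 2 as often as you like; `data` stays in memory between runs, so the load cost is paid only the first time. The file is still an ordinary Python script, so it also runs top to bottom outside PyCharm.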